OK, now I get it. Thanks for being patient with me

I had a quick look and I could see what I was referring to in it.

Following on from Ricks post, another nice touch, I’ve heard of them before, is also in the global embeds.

So where you have the Global Objects, you also have the Field Level Validation where automatic totaling of fields can take place before an insert or update, formatting of string fields etc etc.

It is a place to code extended Validity Checks like the tab of the same name for a field found in the dct, but you have you more control over the field level validation and you can interact with other files and variables outside of the table.

I like this because I dont have to worry about copy code from a form to a process or if I’m using multiple forms to update files, this global embed area is a code once reuse many times area.

Simpler than LOGOUT / COMMIT you can use STREAM / FLUSH with a TPS file.

This will speed up adding or updating 100s of records without the transaction overhead. It locks the file just like LOGOUT.

Read the Help for more details.

Batch Processing Performance

When writing a large number of records, use STREAM() or open the file in a deny write mode, that is, OPEN(file) rather than SHARE(file). After the records have been written, call FLUSH() to allow other users access.

It is very important to use STREAM() when ADDing/APPENDing/PUTting a large number of records. STREAM() will typically make processing about 20 times faster. For example, adding 1000 records might take nearly 2 minutes without STREAM(), but only 5 seconds with STREAM.

It is not necessary to use STREAM() or FLUSH() on a logged out file (performance on logged out files is always good).

STREAM has the effect of LOCKing the file.

… There is more in this topic that covers LOGOUT and TopSpeed …

I have added in some error checking:

Access:sgCategory.Init() ! Initialize if ErrorCode() then Message('Init: ' & ERROR()) . IF Access:sgCategory.Open() then Message('Open: ' & ERROR()) . ! Open the table Access:sgCategory.UseFile() ! Use the table if ErrorCode() then Message('UseFile: ' & ERROR()) . Access:sgCategory.ClearKey(sca:sgCategoryPK) ! Assure all Key fields have lowest value ! sca:sgCategoryPK = sca:CompanyID + sca:CategoryID SET(sca:sgCategoryPK,sca:sgCategoryPK) ! Choose the PK index LOGOUT(30,sgCategory) ! Speed up transactions? LOOP i = 1 TO RANDOM(50,250) ! Test values loop sca:CategoryID = i sca:CompanyID = glo:coID ! Global value for current company if Access:sgCategory.Fetch(sca:sgCategoryPK) = Level:Benign then ! Found !// Update the date modified, leave the other fields alone sca:DateModified = TODAY() ! Todays date sca:TimeModified = CLOCK() ! Now IF Access:sgCategory.Update() then Message('Update: ' & ERROR()) . ! Update the record else ! Not found !// Insert a new record and update its fields where applicable !Access:sgCategory.PrimeRecord() ! Used if there are autonumber fields sca:CompanyID = glo:coID sca:CategoryID = i sca:Description = 'Category ' & i 0{PROP:StatusText,1} = 'Category ' & i !sca:DefaultYN = False sca:DateModified = TODAY() ! Todays date sca:TimeModified = CLOCK() ! Now sca:DateCreated = TODAY() sca:TimeCreated = CLOCK() !DISPLAY IF Access:sgCategory.Insert() then Message('Insert: ' & ERROR()) . ! Insert the record end END ! Test values loop COMMIT() ! Make sure the data is all written to the table if ErrorCode() then Message('Commit: ' & ERROR()) . IF Access:sgCategory.Close() then Message('Close: ' & ERROR()) . ! Close the table

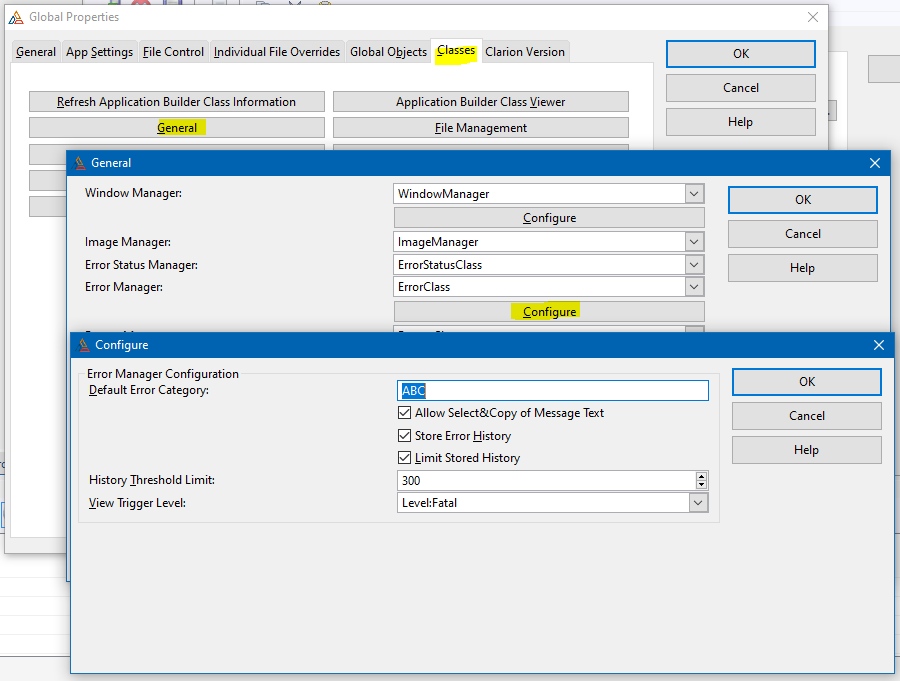

I have also gone to the Global Properties and made the following changes, as suggested in Bruce’s ABC book:

I’m not entirely sure whether this is necessary. Comments?

I don’t think using Error() and ErrorCode() with ABC methods is reliable. Each File Manager has an Error Class as Access::File.Errors… with methods to get the last error that would be checked based on the return value of the last call indicating failure.

Thanks Carl, I wondered why I was getting some bogus errors.

I have created a public procedure:

Errs PROCEDURE (STRING context,SHORT errorno) ! Declare Procedure CODE !// errorno is the error number from Access:File.GetError() ! context is the message passed to indicate which table and activity caused the error if errorno <> 0 then ! we have an error ! Show the error in the debugger ud.debug('--> ' & clip(context) & ': ' & errorno & ' ' & ERROR()) ! Display the error as an on-screen message Message(clip(context) & ': ' & errorno & ' ' & ERROR(),'File Manager Error') end ! we have an error

Now my test code looks like this, which is a bit more readable:

Access:sgCategory.Init() ! Initialize Errs('Cat.Init',Access:sgCategory.GetError()) IF Access:sgCategory.Open() then Errs('Cat.Open',Access:sgCategory.GetError()) . ! Open the table Access:sgCategory.UseFile() ! Use the table Errs('Cat.UseFile',Access:sgCategory.GetError()) Access:sgCategory.ClearKey(sca:sgCategoryPK) ! Assure all Key fields have lowest value Errs('Cat.ClearKey',Access:sgCategory.GetError()) ! sca:sgCategoryPK = sca:CompanyID + sca:CategoryID SET(sca:sgCategoryPK,sca:sgCategoryPK) ! Choose the PK index Errs('Cat.SET',Access:sgCategory.GetError()) LOGOUT(30,sgCategory) ! Speed up transactions? LOOP i = 1 TO RANDOM(50,250) ! Test values loop sca:CategoryID = i sca:CompanyID = glo:coID ! Global value for current company if Access:sgCategory.Fetch(sca:sgCategoryPK) = Level:Benign then ! Found !// Update the date modified, leave the other fields alone sca:DateModified = TODAY() ! Todays date sca:TimeModified = CLOCK() ! Now IF Access:sgCategory.Update() then Errs('Cat.Update',Access:sgCategory.GetError()) . ! Update the record else ! Not found !// Insert a new record and update its fields where applicable !Access:sgCategory.PrimeRecord() ! Used if there are autonumber fields sca:CompanyID = glo:coID sca:CategoryID = i sca:Description = 'Category ' & i 0{PROP:StatusText,1} = 'Category ' & i sca:DefaultYN = False sca:DateModified = TODAY() ! Todays date sca:TimeModified = CLOCK() ! Now sca:DateCreated = TODAY() sca:TimeCreated = CLOCK() IF Access:sgCategory.Insert() then Errs('Cat.Insert',Access:sgCategory.GetError()) . ! Insert the record end END ! Test values loop COMMIT() ! Make sure the data is all written to the table Errs('Cat.Commit',Access:sgCategory.GetError()) IF Access:sgCategory.Close() then Errs('Cat.Close',Access:sgCategory.GetError()) . ! Close the table

Thank you once again for patiently helping me to learn and get things right, before I learn bad habits.

@DonnEdwards All of the file manager methods you are call will post an error message already (except for Fetch). You don’t need to call Message separately.

If you want to take control of the messaging, then change your code to use the TRY version of the methods (TryOpen, TryUpdate, etc). These will return to your code without displaying a message and let you do what you want re: the error.

I think you might be right, I had a quick look and couldnt see it in the pdf’s anywhere.

@DonnEdwards If you look inside the some of the ABC class files, there should be some examples in there on how to throw error messages. This way if you do use the silent TRYxyz methods, you can then throw your own messages. I thought there was some examples in Russ Egens object books but I couldnt see any.

In addition to that, ERRORCODE() is often not useful when used after a non-rtl procedure call.

It is highly possible that any ERRORCODE() you receive could be completely irrelevant to the thing you care about, because that procedure could call other RTL functions that might trigger their own un-related ERRORCODE().

I was getting a false positive when using Clearkey, which GetError does not give.

So should I drop all the “errs” checking and just do the TRYxxx instead?

I’ve always used the try versions of the methods and handle the errors in my own way

It’s up to you. I generally allow the default error messages to display unless I specifically want to control something.

I’ll add this comment as I’ve been looking at ways to manipulate this Global Embeds %FieldLevelValidation because its something I happen to be working on whilst trying to balance the need to cater for future updates with databases, in particular MS SQL server with new fields.

This applies to all SQL Drivers except Oracle, when using the /TurboSQL switch in a dct file/table driver string, on a SQL server table, point being, the /TurboSQL switch does not check all the field columns in the dct & clarion app match the SQL Server.

This lets you run different apps, lets call them CurrentApp and NewApp on the same database provided the new fields for NewApp are added to the end of the list of the field columns. This is useful for testing NewApp in production or live, side by side with the CurrentApp, think of it like the final stage of testing in production, without having to a do a company wide upgrade for all users, but a final shake down by power users before the users get the app.

The other issue is using the ABC Filemanager Validity Checks.

Just for reference all the embed code added to the global embeds Field Level Validation can be found in the Clarion Debugger’s bottom left window pane, where you will be looking in the Procedure column Hide:Access: < DctFilename > .ValidateFieldServer(Long,Byte).

The way ValidateFieldServer works in the ABC file manager is each field is assigned a number and it starts at 1 and loops through each field looking for any global embed code added to the Field Level Validation embed for that field.

The order is based on the order seen in the dct, so if your first field in the dct is the record/row ID, and if there is any Field Level Validation embed code, this will run first.

This Field Level Validation is where you can create functions to do your own autonumbering or call a SQL procedure to return a value, provided the dct primary key for this field has autonumbering switched OFF. IF you leave AutoNumber on the primary key switched ON, then the built in ABC filemanager autonumbering will still take place. [Edit] And you dont have to restrict your use of calling a stored procedure to just autonumbering tasks either.

But I have another scenario I use this Field Level Validation for. I create display string fields in the dct and use these instead of using the input fields as it makes formatting in browse list boxes and reports easier and quicker to use and I reduce the amount of coding I have to do across multiple windows and reports as I dont need to copy embed code from one browse list box to another browse list box or from one report to another report etc etc. Fully Qualified member of the Lazy Programmers Club!

So in a typical invoice scenario, on one or more update forms, I would have the Qty field and I also have a separate file called Units, where the user can choose the unit which corresponds best to the Qty field.

So the users can specify 1 and Kilos or 15 and Lbs (pounds) or 30 and Mg or 50 and Length, or 3 and Pallet in the form, and in the browse I just display a single QtyDisplayString as this makes column sorting work better than having two columns showing Qty and Units. If I use the Qty and Units in the list box, and click on the Qty field, this will group all the 1’s and all the units where 1 has been sold, but by using the QtyDisplayString and sorting on this column I get to sort on the Qty and then the Unit just by clicking on the column once. I know we can hold down the Ctrl Key and do a multi column sort, but the user’s tend to forget this if they are occasional users of this functionality. [Edit] I know this isnt a good example because its possible to apply special column sorting in a browse, but I dont just use one display string field, sometimes I have in the dct and use two display string fields, mainly for reports as those columns are not resizable so I need to perform line wrapping functionality over two display string fields, but I also use this global embed field level validation to perform Evalute(Prefix:FieldContainingUserDefinedRuntimeFormulas) and other fancy stuff which isnt listed here, which includes formatting.

Now the QtyDisplayString is filled and formatted automatically by adding some code to this fields, field level validation global embed eg

Prefix:QtyDisplayString = Clip(Left(Prefix:Qty)) &' '& Clip(Left(OtherFilePrefix:UnitDescription))

To get the correct OtherFilePrefix:UnitDescription, either add OtherFile to the Update Form(s) or Process(es), Table Schematic Window, Other Tables section where it will be opened and closed for you as per the normal Form or Process procedure rules or you can create an alias of this file/table in the dct and create a function which accepts the Prefix:UnitID and returns a string back to the QtyDisplayString field level validation embed so the code would look like

Prefix:QtyDisplayString = Clip(Left(Prefix:Qty)) &' '& Clip(Left(RtnAliasUnitFileUnitDescription(Prefix:UnitID)))

Equally this could simply be a function returning some sort of autonumbering value instead of using the ABC filemanger AutoNumber option in the dct file’s primary key.

But the point is, the order these fields are listed in the dct is the order any global field level validation embed code takes place in.

So in the dct you dont want the QtyDisplayString listed before the Qty and UnitID field, you want the QtyDisplayString field after the Qty and UnitID field, otherwise you could find calculations and string formatting taking place in the wrong order. And this is where when using the /TurboSQL switch things can get tricky because you might have field level validation code in the Old App conflicting with new fields in the NewApp and you want a new field to not be at the end of the list for the /TurboSQL benefits but you need the NewApp to modify some existing field in the CurrentApp.

So the TLDR is just be aware the order the fields appear in the dct is the order any global embed code field level validation code will be processed in and this could impact updates when running old and new versions of an app in production/live when using the /TurboSQL switch and run CurrentApp and NewApp side by side as the last stage of production testing.

The other benefit of using this Global Embed Field Level Validation, is it massively reduces the amount of embed code in various windows and reports, and as the app grows and the number of users grow, some of this code is already isolated and abstracted enough to then become a stored procedure in the SQL to further speed things up.

Does that make any sense whatsoever? ![]()